I’m a programmer by training, but have also spent time as a technical artist, game designer and medical illustrator, developing a wide array of creative skills. I am always excited to learn about new fields, to integrate them into my worldview and employ them to solve problems.

I’m at home working with novel inputs like hand and eye tracking, electromyography and nonverbal vocal input, and with outputs like positional, dynamically synthesized audio, procedural graphics, vibrotactile feedback and anything else I can get my hands on. I want to see how the totality of experience can be assimilated and synthesized into a sense of something going on.

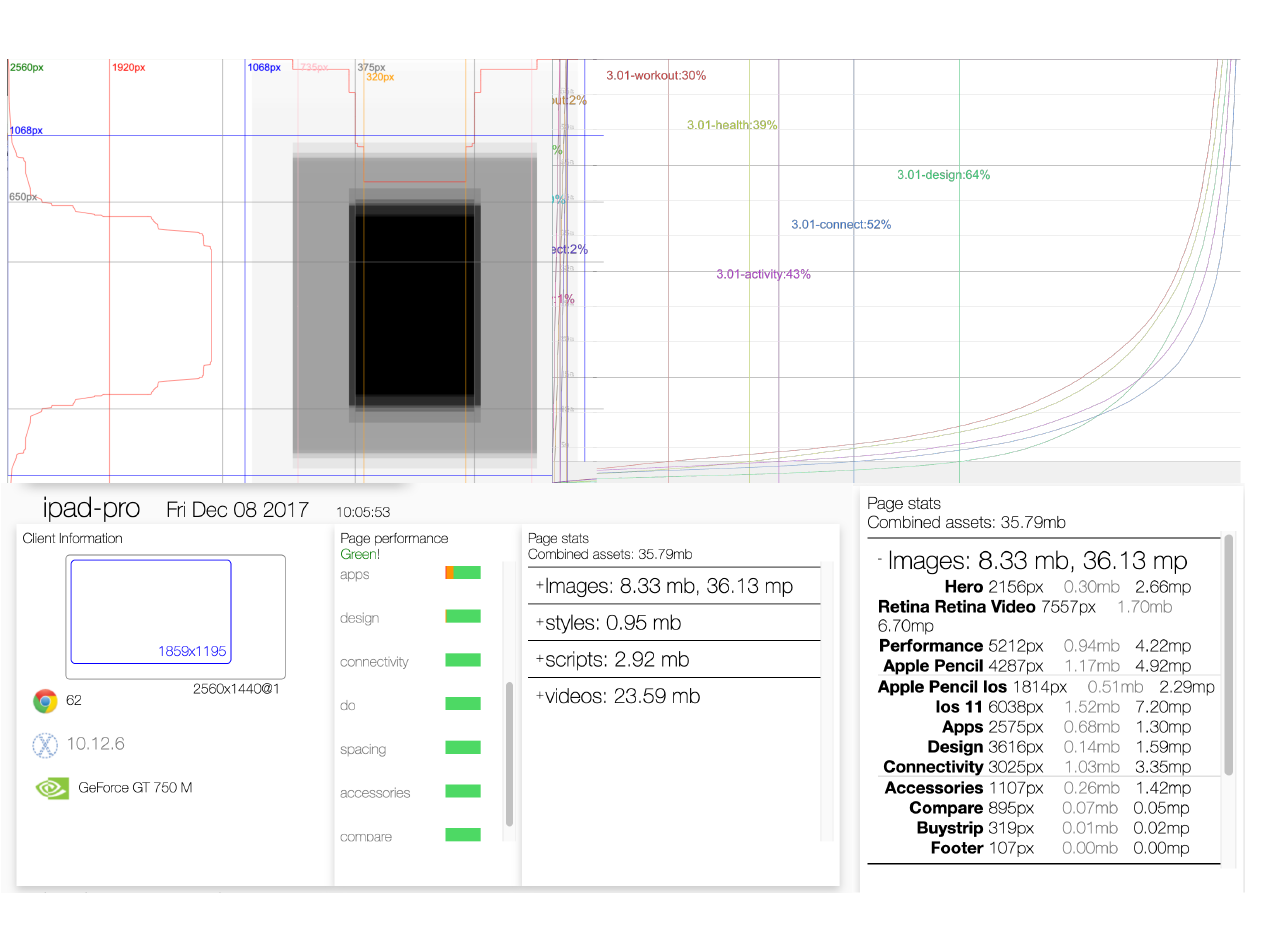

When thinking professionally about the experience of our customers and their amazing products, it’s essential to consider the messy reality that those experiences happen within. In order to give our team a better grounding for Apple.com, for instance, I worked with Legal & Privacy to collect information about client devices and understand the responsible parameters to work within. I built rich, interactive visualization tools to work with that data, and established simple, clear descriptions of the patterns we discovered. I built motion analysis to identify customer context and environment, and am developing a ‘mobile preview’ mode that reminds developers not just of the site they are building and the device it runs on, but of the complex reality that the device and its user reside within.

The most interesting questions are the ones that are difficult to ask in the first place. I strive to understand not just the broader context of contemporary use of technology but its deeper history, to fully grasp the social and political dimensions as well as the technical. My current personal project involves AR/VR word processing, and has taken me through cognitive philosophy, political science and linguistics to a fascinating perspective that I’m excited to develop further.

I’m electrified by the possibilities of the short- and mid-term future and love to share that energy with the people around me. I am a frequent mentor at work, contribute regularly to the Near Future Laboratories’ office hours and have developed friendships with many professionals and academics around the domain. I have hosted over a dozen workshops and tutorials within Apple on creative/technical subjects like Illustrator, 3D modeling and software architecture, and am a regular in Apple’s design meetups and Slack space, providing valuable guidance and feedback for the community.

I have been looking for an organization with both the technical skill to prototype the future of digital experiences, and the grounding in philosophy and the humanities to understand the consequences that flow from them.

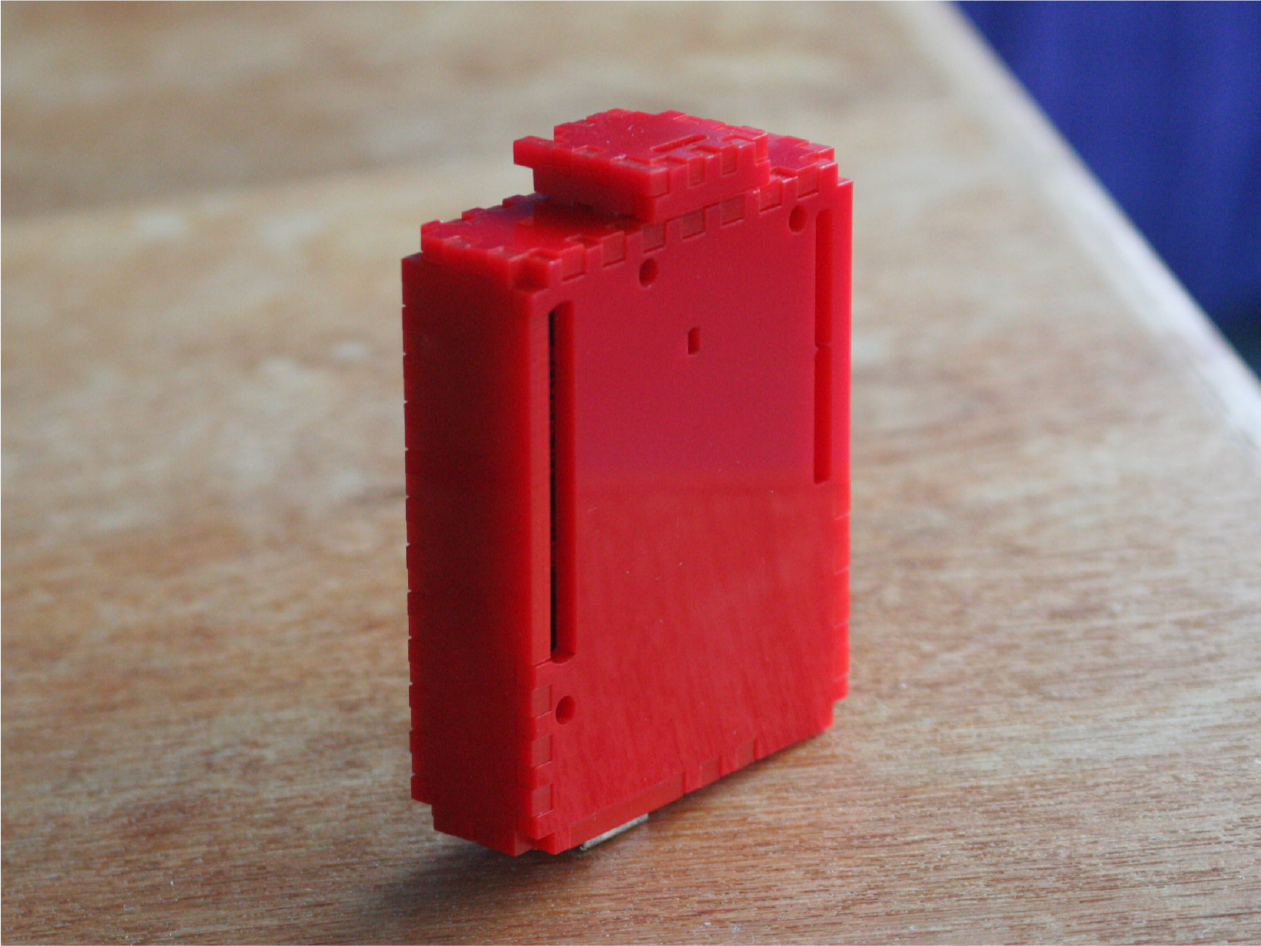

While the limitations of static media are what drive us toward digital ones, it’s important to recognize the meaning we glean through the technology and culture of books we have developed over the millennia. To explore this, I built a series of prototypes that demonstrate more aspects of what books are - the visual, textural qualities of book covers and conditions, and the tangible sense of a text we get from seeing its size and our current position in it - and that explore mechanisms to give more developers the capacity to investigate them as well.

After seeing the gorgeous page for Stripe Press, I wanted to build a procedural generator to think about the range of options available when constructing a real book, and what psychological impacts they might have. Beyond just reproducing high-fidelity representations of books, I am excited by the opportunity to drive the many choices for presentation with meaningful attributes of the underlying data - e.g. the cost of a document, the age of the information or its country of origin may be subtly reflected in its appearance. In addition to more explicit disclosure, this gives users of a system the opportunity to recognize those cues and learn those correspondences for richer interaction.

While the previous exploration was interactive in that it can create many book appearances, it still can’t support the interactions of a physical book. I wanted to create a book that could open, with pages that turn and all the tangible landmarks that we associate with real books. When I am reminded of the intrinsic qualities of printed documents - the size and shape of the spine, the bend of the book - I am struck by the shallowness of our current digital representations of documents, and drawn to finding more immediate and expressive ways of portraying more about our relationship to them on computers.

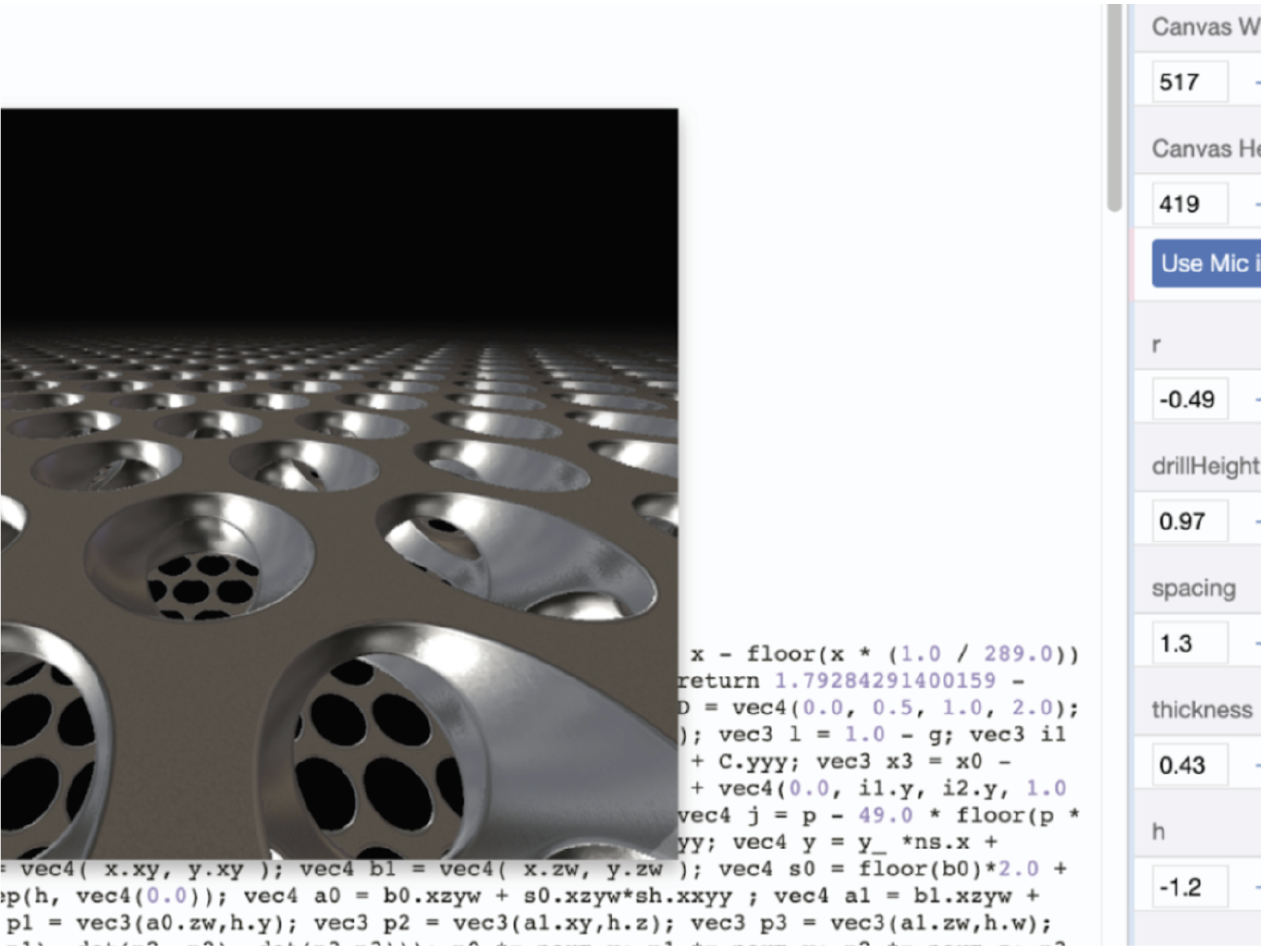

While I believe that 3D graphics are transformative in the context of software experience, a skill gap will remain between people accustomed to traditional 2D development and the graphics techniques required to make the most of the new medium. By finding ways to use older methods to make new materials we can narrow the gap and include more developers in finding the best shape of interface design for the future.

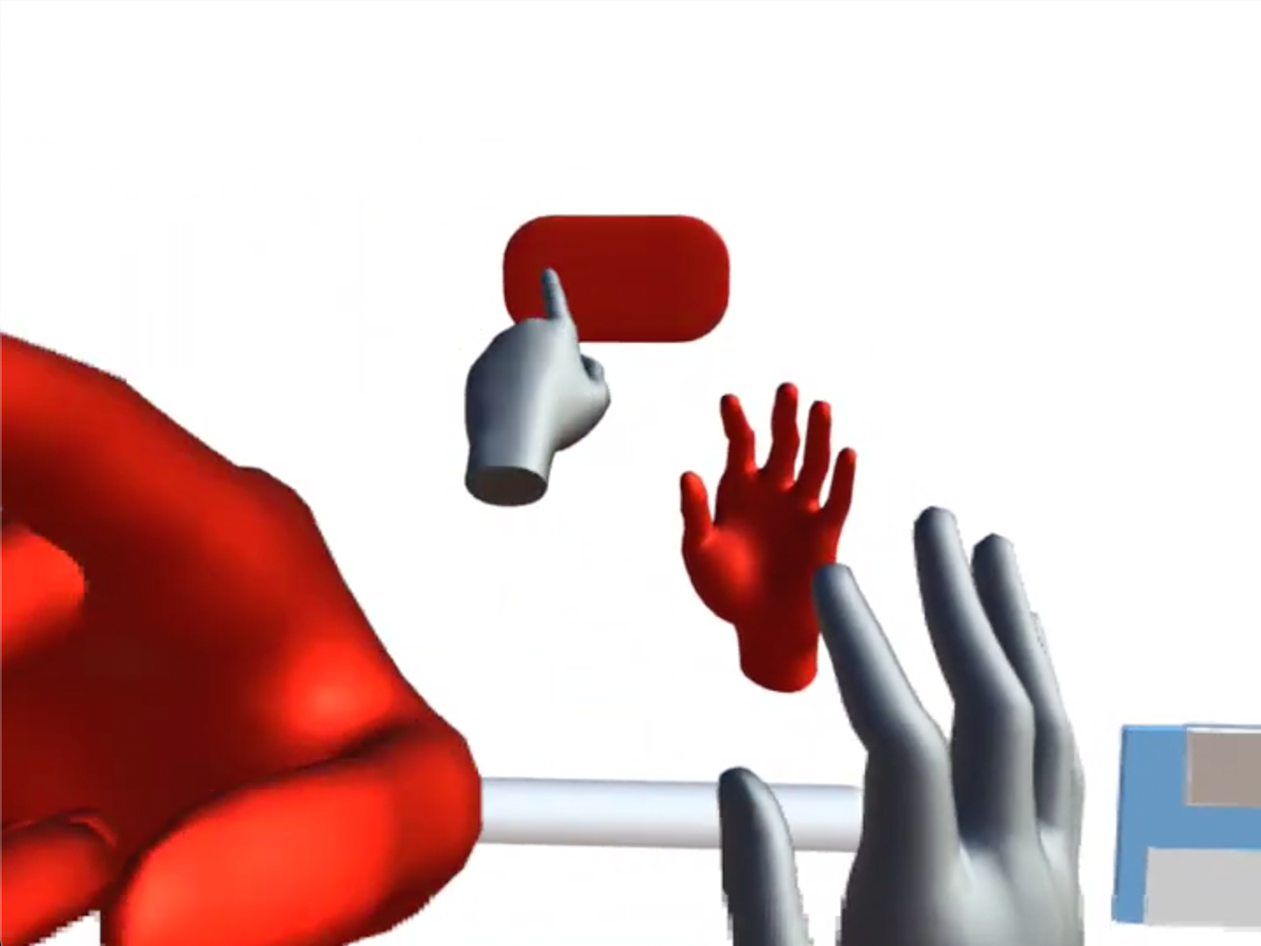

Computing has a history of setting up users for failure by providing pedantic input environments and keeping the results of activity at arm’s length. Building truly interactive spatial systems means producing immediate, meaningful feedback from exploratory, bodily actions on a system.

While this was partly a technical stepping stone toward understanding copresent webVR, I am excited to provide an environment for people to safely interact with themselves and feel the consequences. It also allows the easy performance and retrieval of hand motion for 3D modeling and further prototyping!

This project was initially motivated by a desire to do the most boring thing with the most exciting technology available. However, I soon realized that “the basics” of reading and writing text constitute the bulk of most digitally-mediated work worldwide, so improving that experience may be the most impactful work in all of computing! The basic metaphor of ‘word processor’ is more focused on creating printable documents than structuring ideas. If we can find alternative presentations to conduct the same tasks, we are better positioned to understand what each of them achieves.

An exploration of how a user’s hand pose could be responsible for encoding the state of the system. In the same way that different tool grips facilitate different functions, being able to access different functions with different gestures is more appealing than nearly identical taps and clicks. I also like the ‘pose-distance’ feedback to indicate when the user’s hand is near certain functional poses, as the feedback helps train the user how to use the system.
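The ‘pose-distance’ idea can be illustrated with a minimal sketch. This is not the project’s actual code: the five-value finger-curl representation, the reference poses in `POSES`, and the `radius` parameter are all assumptions made for illustration.

```python
import math

# Hypothetical reference poses: one curl value per finger (thumb to pinky),
# where 0.0 is fully extended and 1.0 is fully curled. Values are illustrative.
POSES = {
    "point": (1.0, 0.0, 1.0, 1.0, 1.0),
    "fist": (1.0, 1.0, 1.0, 1.0, 1.0),
    "open": (0.0, 0.0, 0.0, 0.0, 0.0),
}


def pose_distance(a, b):
    """Euclidean distance between two finger-curl vectors."""
    return math.dist(a, b)


def pose_feedback(hand, poses=POSES, radius=1.0):
    """Return (nearest pose name, feedback strength in [0, 1]).

    Strength is 1.0 when the hand matches the pose exactly and falls
    linearly to 0.0 once the hand is `radius` or more away from it.
    """
    name, target = min(poses.items(), key=lambda kv: pose_distance(hand, kv[1]))
    strength = max(0.0, 1.0 - pose_distance(hand, target) / radius)
    return name, strength
```

The strength value could drive any continuous output, such as a glow or a tone, so that feedback rises as the hand approaches a functional pose.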

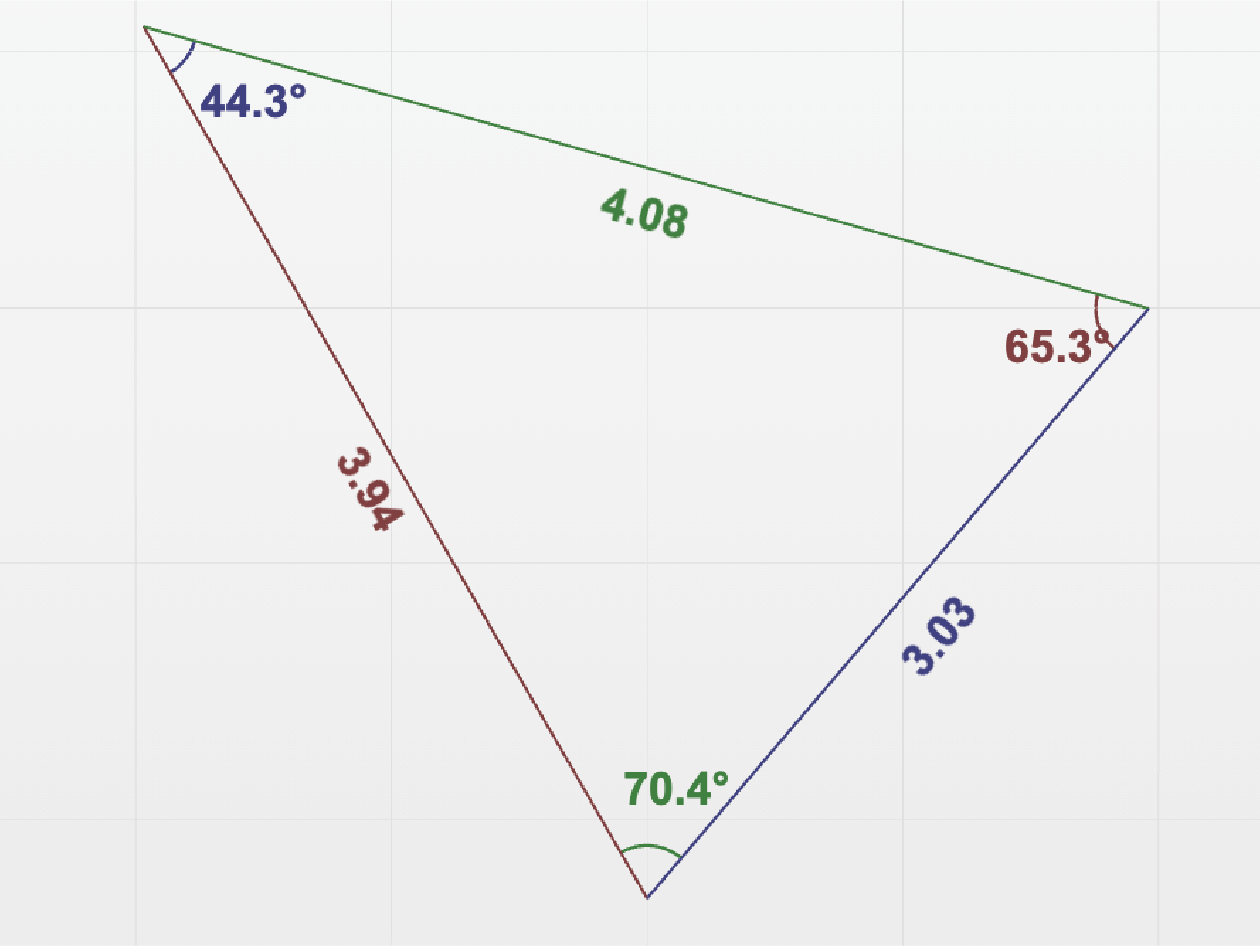

A simple sketch to pull apart the concepts of calculations, numbers and numeral-based representations. While the digital calculator has enabled incredible things, it has quashed the representational variety of tools that people used to solve numerical problems in the past. I wanted to show how numbers are equally well-represented by lengths and angles, and how replacing momentary computation of a single solution with continuous solving across the problem space can yield tangible, experiential benefits to a user by exposing the dynamic relationship between angles and lengths in a triangle.
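As a rough illustration of continuous solving versus a single answer, the sketch below uses the law of cosines to relate two side lengths and their included angle to the third side, then samples that relationship across the whole range of angles. It is an assumption about one way to frame the idea, not the sketch’s actual implementation; the function names `third_side` and `sweep` are hypothetical.

```python
import math


def third_side(a, b, angle_deg):
    """Law of cosines: length of the side opposite the angle between a and b."""
    gamma = math.radians(angle_deg)
    squared = a * a + b * b - 2 * a * b * math.cos(gamma)
    return math.sqrt(max(0.0, squared))  # guard against tiny negative rounding


def sweep(a, b, steps=7):
    """Sample the third side across 0 to 180 degrees, not just one solution."""
    angles = [i * 180 / (steps - 1) for i in range(steps)]
    return [(angle, third_side(a, b, angle)) for angle in angles]


for angle, side in sweep(3, 4):
    print(f"{angle:5.1f} degrees -> side {side:.3f}")
```

Drawing every sample at once, or updating the figure as the angle is dragged, exposes how the length changes with the angle instead of reporting one isolated number.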

I’m eager to have compelling, intrinsically spatial examples of webXR to help communicate the benefits of the platform. In particular, I’m fascinated by exploring the full variety of ways we can understand the inputs and outputs in an immersive system. We’re so accustomed to using computing environments as if no one is looking, so I am intrigued by introducing agents that observe and respond to your full-body pose in a social setting. I also wanted to begin developing rich synthesized audio capability to deliver multidimensional feedback with more nuance than the simple playback of static audio samples.

While most of my career has involved discovering novel experiences for existing hardware platforms, I have found times when a question is too pressing for me to wait for other solutions. My expertise in both traditional 3D modeling and parametric graphics programming has made building my own a productive and exciting exploration.

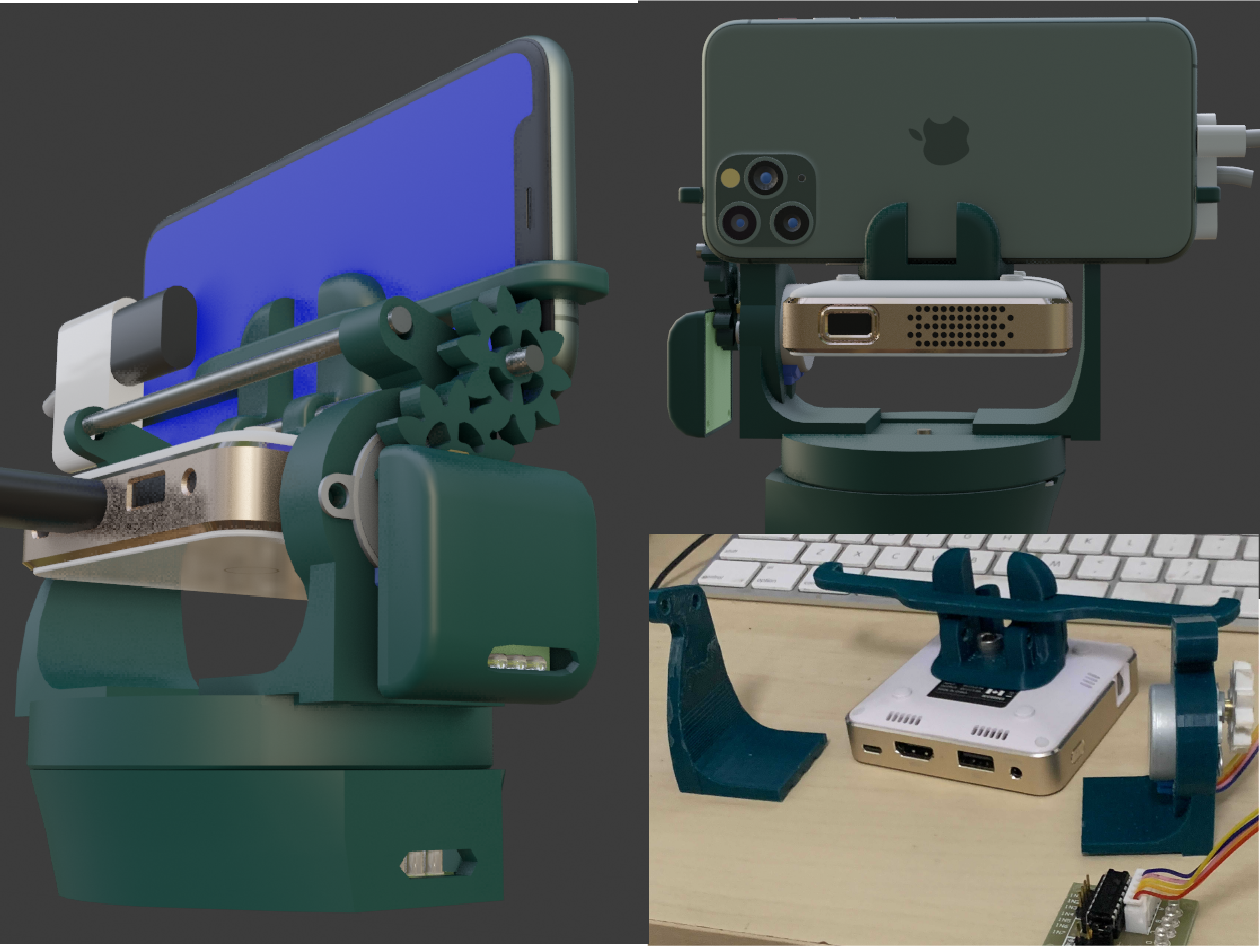

After spending a long time on VR and thinking about AR, I’ve been troubled by the exclusivity of the dominant forms of interaction: both phone-based and headset-based experiences put people who share the environment but lack the technology at a significant disadvantage. On top of that, the friction of opting to use either device is a significant stumbling block to the simple, effortless support that true augmented reality could provide. After finally buying a pico projector I wanted to explore a form factor that could render AR in a way that is both socially inclusive and has a chance to support the most mundane calls for digital mediation a user might have.

I read about this concept in Wired and immediately felt the need to play with it on my own - to understand the mechanics of how it might work, how a user would feel about putting it on, and how deeply the impact of such augmentation might be felt. Even knowing the weight of the device and having the ability to tweak the way the stimulus rolled around it was fabulously informative for understanding what it might be good for. Being responsible for the development of the entire platform gave me an even better appreciation for all the choices available for how it might work, and the experiential consequences those choices might have.

After building the compass belt and hacking a webcam to be IR sensitive, I wanted to construct software and hardware that was genuinely useful to my family’s life. Having control over the platform let me overlay additional features like accepting Skype calls and recording feeding times seamlessly as their need became clear, and with a peace of mind that the platform was private and wasn’t doing anything I didn’t want it to!

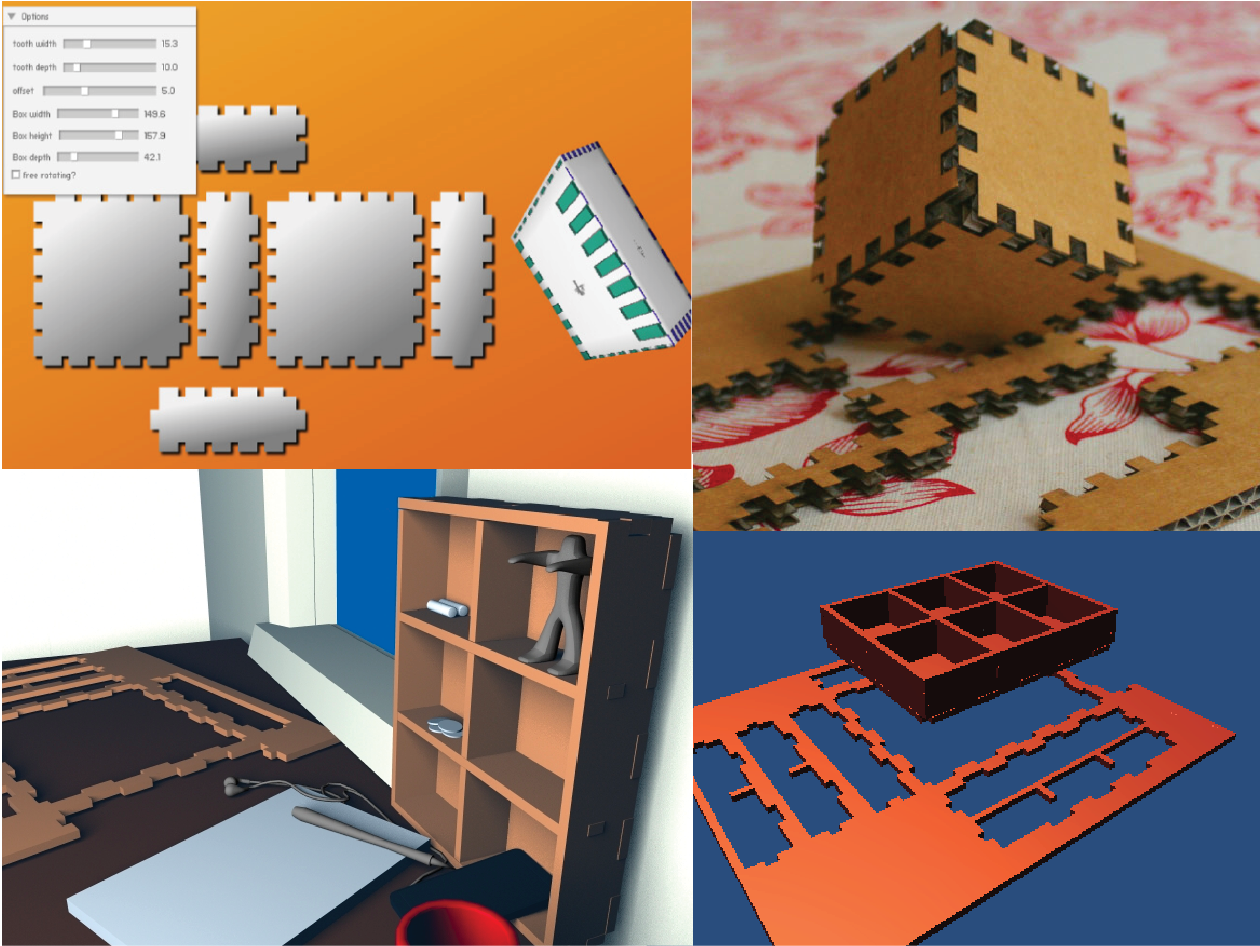

After being contracted to develop a system for 3D preview of laser-cut parts, I became interested in the challenges of hobbyist digital fabrication, and curious about what would be the most useful things to make. I learned the general principles of good fabrication and found that most fabrication communities focus on arcane implementation details. I wanted to make a tool that freed up casual users to think more about their own desires. It was an object lesson in how unimportant those details are to the public, as long as it works!

When I joined Apple, our website represented software and hardware exclusively with static images or expensive video sequences. In order to give our stories more flexibility, I created and shipped interactive, real-time visualizations of hardware and software to be reused effortlessly wherever appropriate. In addition to the technical demands, that required learning an immense amount about how to work across a wide variety of teams and problems. I’ve learned how to pursue the root cause of why things are a certain way, and to pull alternatives gently but persistently into the realm of the possible.

Since 2014 I have been creating real-time, interactive graphics for explaining ideas and showing Apple’s products. I have worked closely with teams in 3D production, HI, Industrial Design and Safari to find representations that are beautiful and truthful through implementations that are flexible and expressive. Apple.com is a critical showcase of our offerings, reaching millions a day across nearly 100 locales and a dozen languages. In order to build these executions at the speed of thought, and to validate which constraints are appropriate, I have also built a series of tools to help us think.

After learning enough about shader programming to make them myself, I wanted a tool to help present the different aspects of their function at the appropriate level of abstraction. Stradivari lets me focus on the conceptual development of a visualization, and has had the added benefit of being an excellent environment for teaching and learning about graphics concepts.

Marcom is a creative-led organization, which can be in tension with the collection of relevant quantitative data about how its products - marketing material on Apple products and services - are used and consumed. I wanted to show that rich quantitative insight was possible while remaining simple enough to follow, without shutting the door on creative discovery.

I believe that writing is fundamentally a technology. That means it can be innovated upon or even replaced entirely. I want to tease apart the distinction between the underlying concepts that written communication conveys, the various technical implementations that convey it, and the experiential impact of having it conveyed.

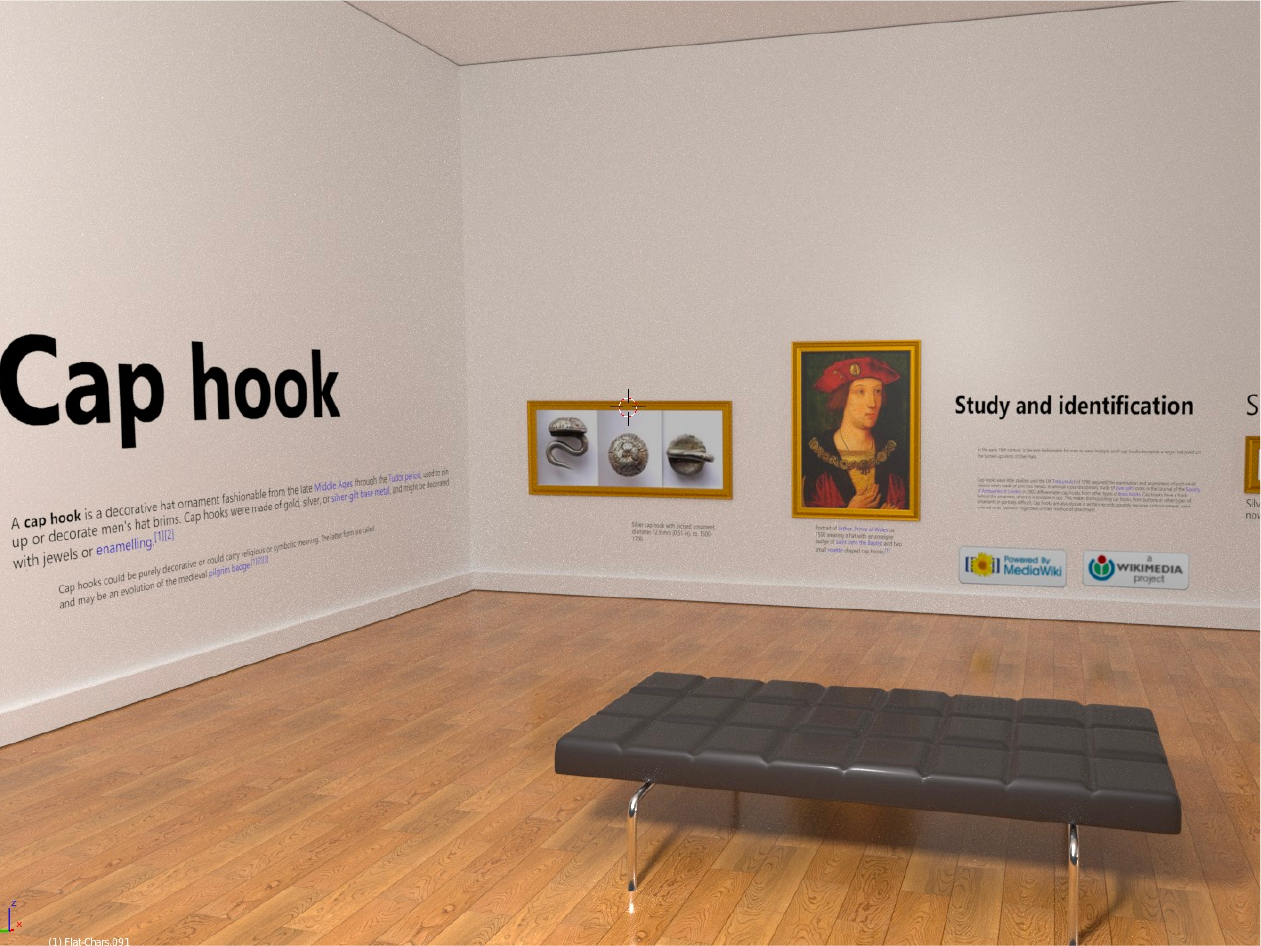

Reading and information processing involve a complex translation of symbols into ideas. While we have many different modalities for doing this - books, museums, classes, lectures etc - much of our digitally-mediated interaction relies on the most restrictive modalities inherited from books, with little of the mobility and flexibility that paper affords. I have explored the effect of spatializing information at room scale, borrowing from some established norms in gallery and museum design to render the full richness of Wikipedia as an exhibition.

After visiting some famous museums in London in 2022, I was struck by the visibility of the information architecture of the spaces - at times more so than the structural architecture. Following the earlier Wikipedia-as-gallery exploration, I wanted to imagine what a more carefully constructed space could do for a specific piece of information, as a clue to how future systems might maximize the comprehensibility of a given “text,” in whatever form that might take.

This project came in three parts. First I wanted to view Wikipedia articles as timelines that could clarify the passage of time as a factor in the stories told through the site. I then wanted to overlay one timeline over another in the way that experts might leverage their general understanding of important chronologies, e.g. civil rights, the Cold War etc. on a new subject of interest. Finally, while it would be possible to explore these ideas in 2D on a screen, the need for a lot of space makes it the perfect candidate to prototype in a more immersive environment.

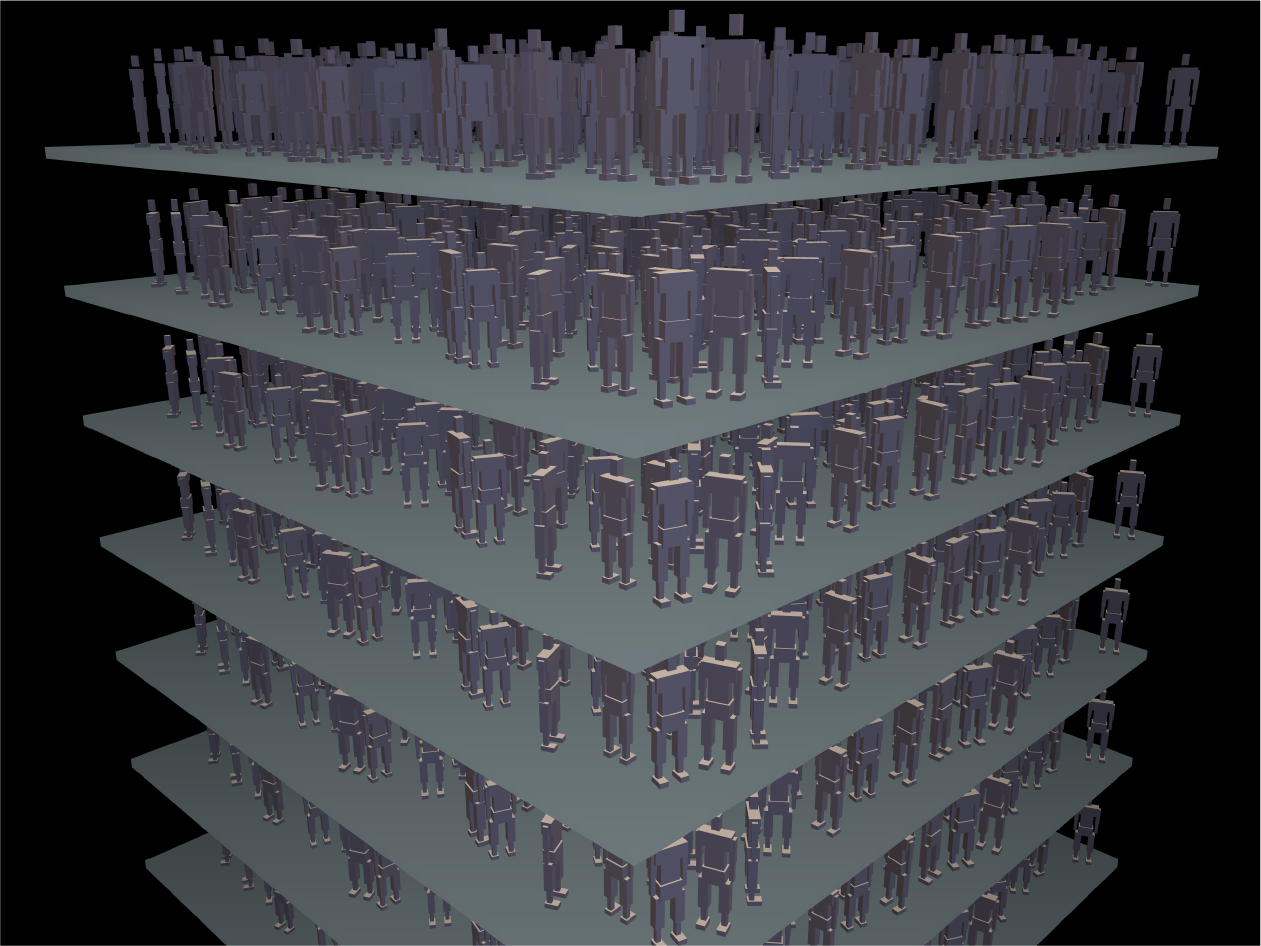

While we can read numerals, our ability to understand the numbers and quantities they represent is more limited than we typically believe. Being able to effortlessly create visual representations of any quantity - people, mass, time, money etc - gives us the opportunity to have a richer and more comprehensive understanding of that specific quantity and the role it plays in the stories we tell.

Through more than 30 years of using a computer for programming, communication and artistic purposes, I’m aware of the way that tools can help at very different levels of abstraction. Despite technical improvements over that time, we don’t seem to be closer to grasping that everything - basic natural language included - can be augmented in the same way as pixels or polygons.

Writing is a technology, and I am fascinated by its history and impact on thought. The state of technology today allows us to reimagine the process and function of writing. Doing so may yield the greatest technological benefits of our lifetime. These are some of my explorations to that end.

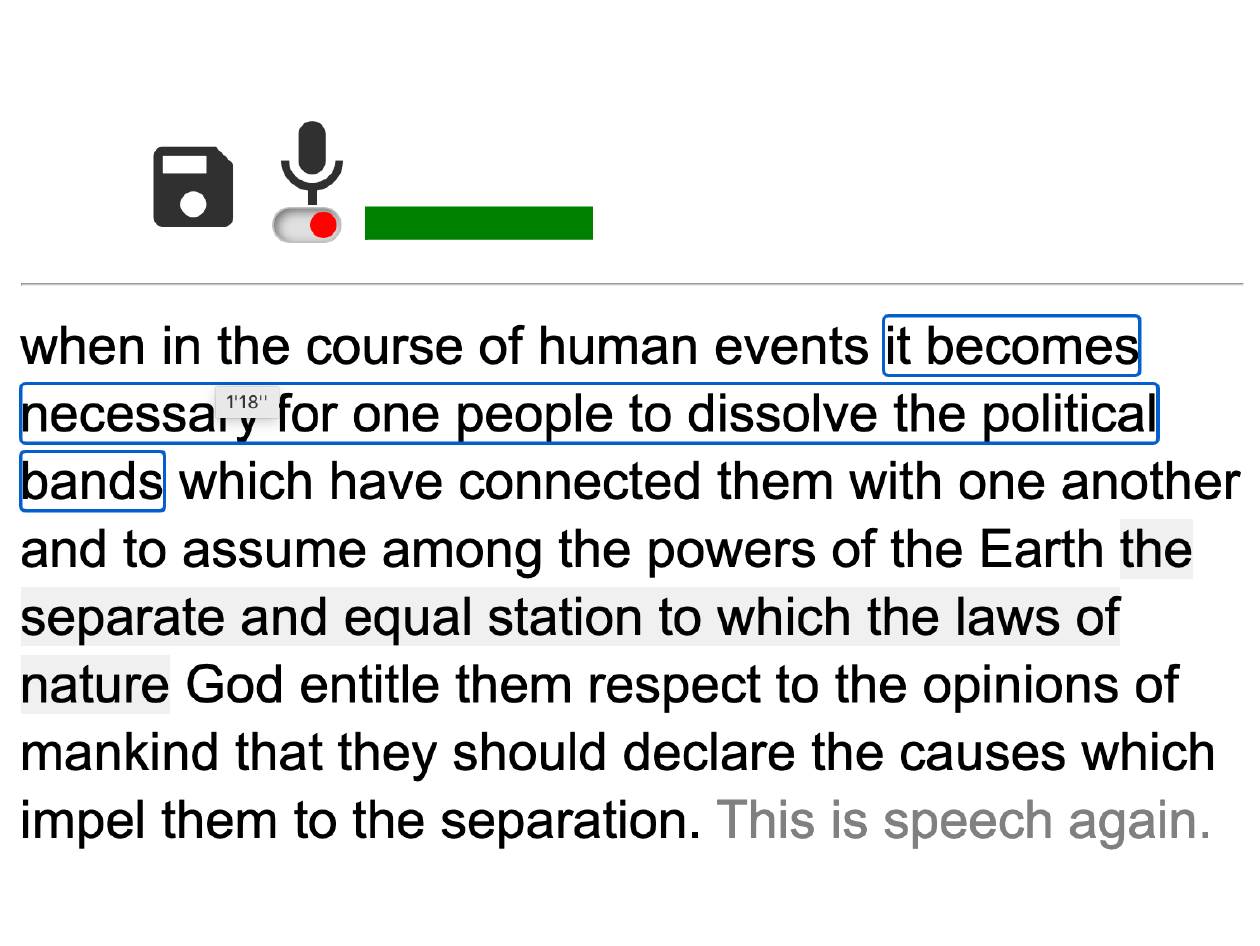

I have tried to use dictation for text input for over 20 years, but it’s still flawed in ways that make it hard to commit to as a replacement for typing. If I’m not monitoring the results as they appear, compounding errors can render my original intent indecipherable. If I do monitor the input, correcting the slight errors here and there can break my concentration as I continually switch from expressing concepts to editing words. I wanted to design an approach that was realistic about the state of the art and preserved the necessary artifacts to correct errors where they occur.

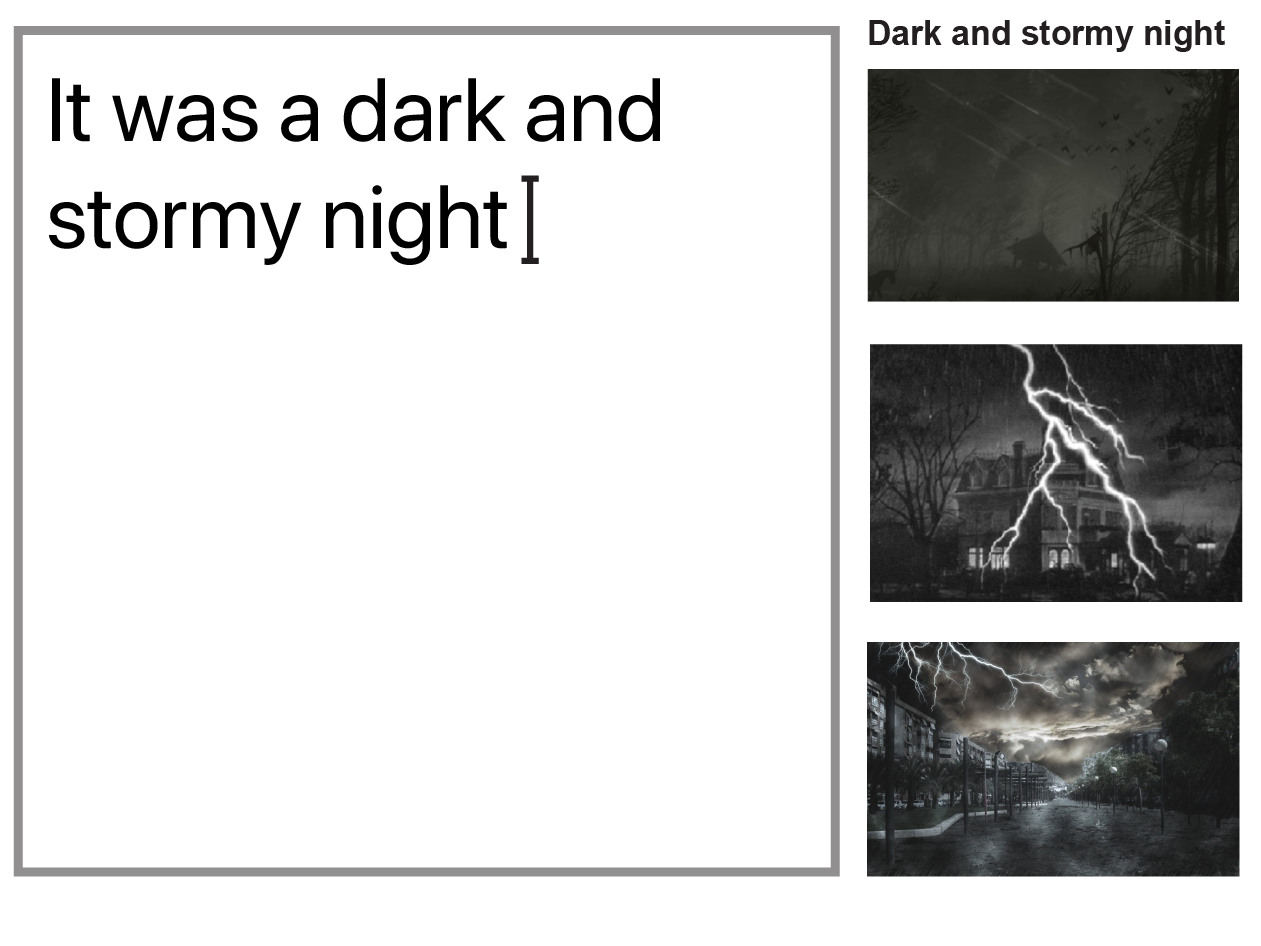

When people imagine places or things, the sense of clarity they have about the images that come to mind is often illusory - this becomes clear when they are asked for further details about what they are imagining. I wanted to explore the consequence of having imagery invoked directly within the context of creative writing - how that would change a writer’s grasp of those details, and how the act of writing changes when it includes the ability to invoke images automatically alongside text.

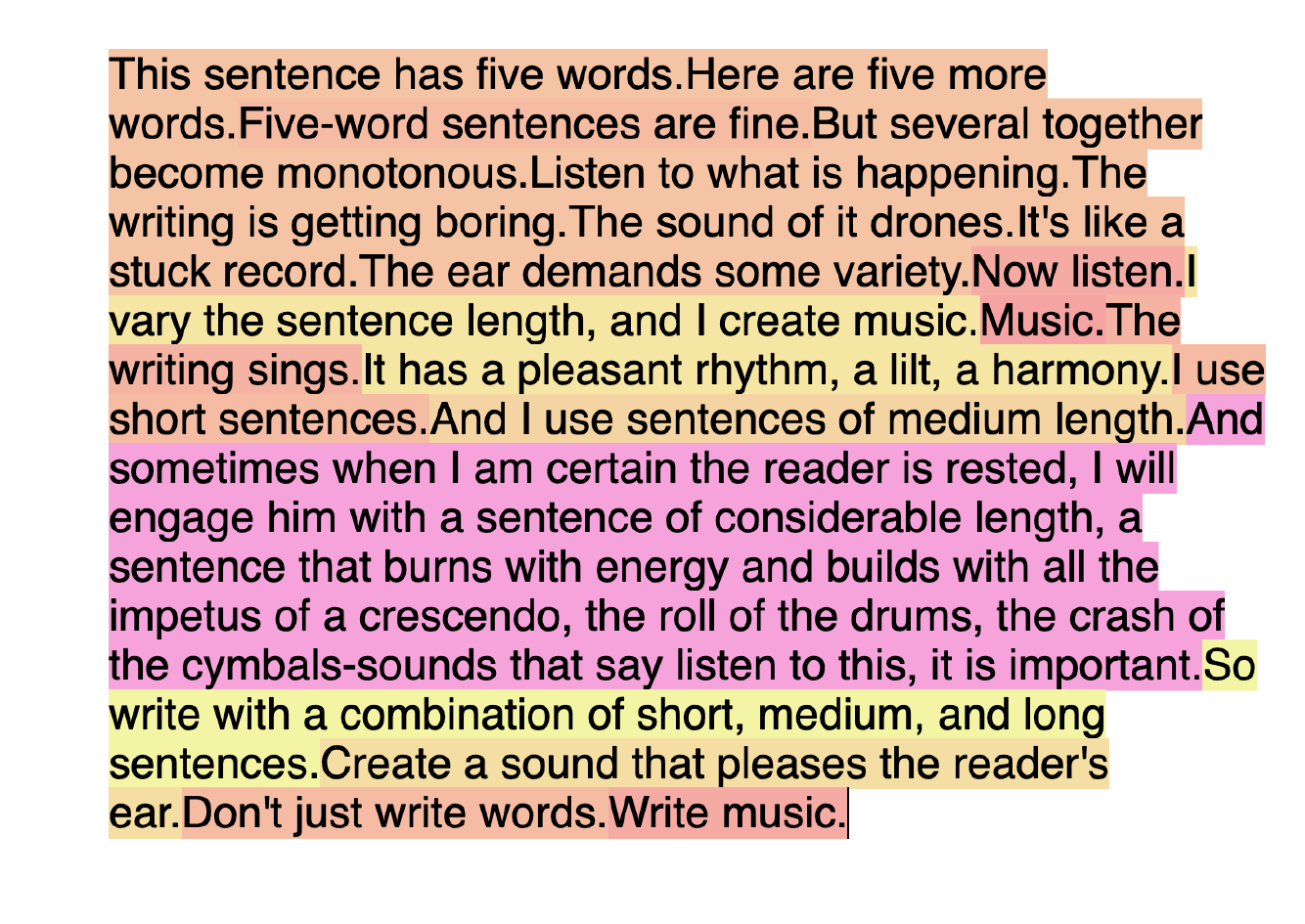

Text can have properties we can all agree on like tone, complexity and logical coherence - but those properties aren’t visible without careful reading. I wanted to create automatic, glanceable representations of these higher-level properties to explore how computational power can support a higher-level understanding of the ideas we create - and particularly arrange and edit - in textual form.

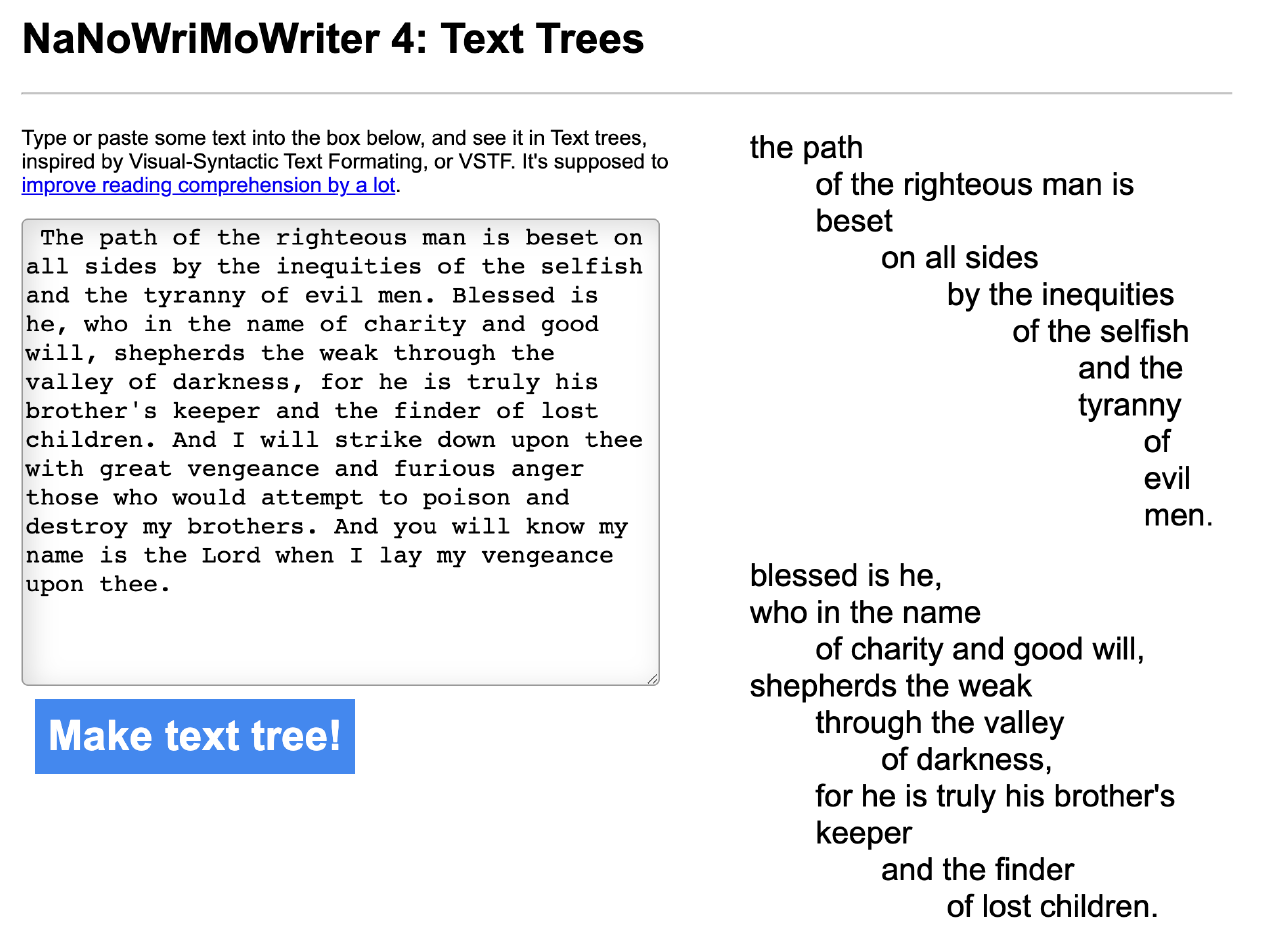

The current representation of text is the result of compromises between economy and tangibility over centuries of technological development and shifting platforms. The printing press placed limits on the number of glyphs and typefaces we use, the linotype machine set expectations for line length, economies of distribution impacted information density and the balance of how whitespace was employed on the printed page. We now have the benefit of near-infinite representational flexibility, and after coming across VSTF I wanted to explore the benefit of using more space, and arranging text for the benefit of comprehension and recall.

While dictation engines have been moderately successful in encoding words, no systems currently allow users to add or change punctuation without a keyboard. With an eye to reducing our reliance on keyboards for nuanced expression, I am interested in gestural alternatives, and in exploring ways to express aspects of editing concepts gesturally.

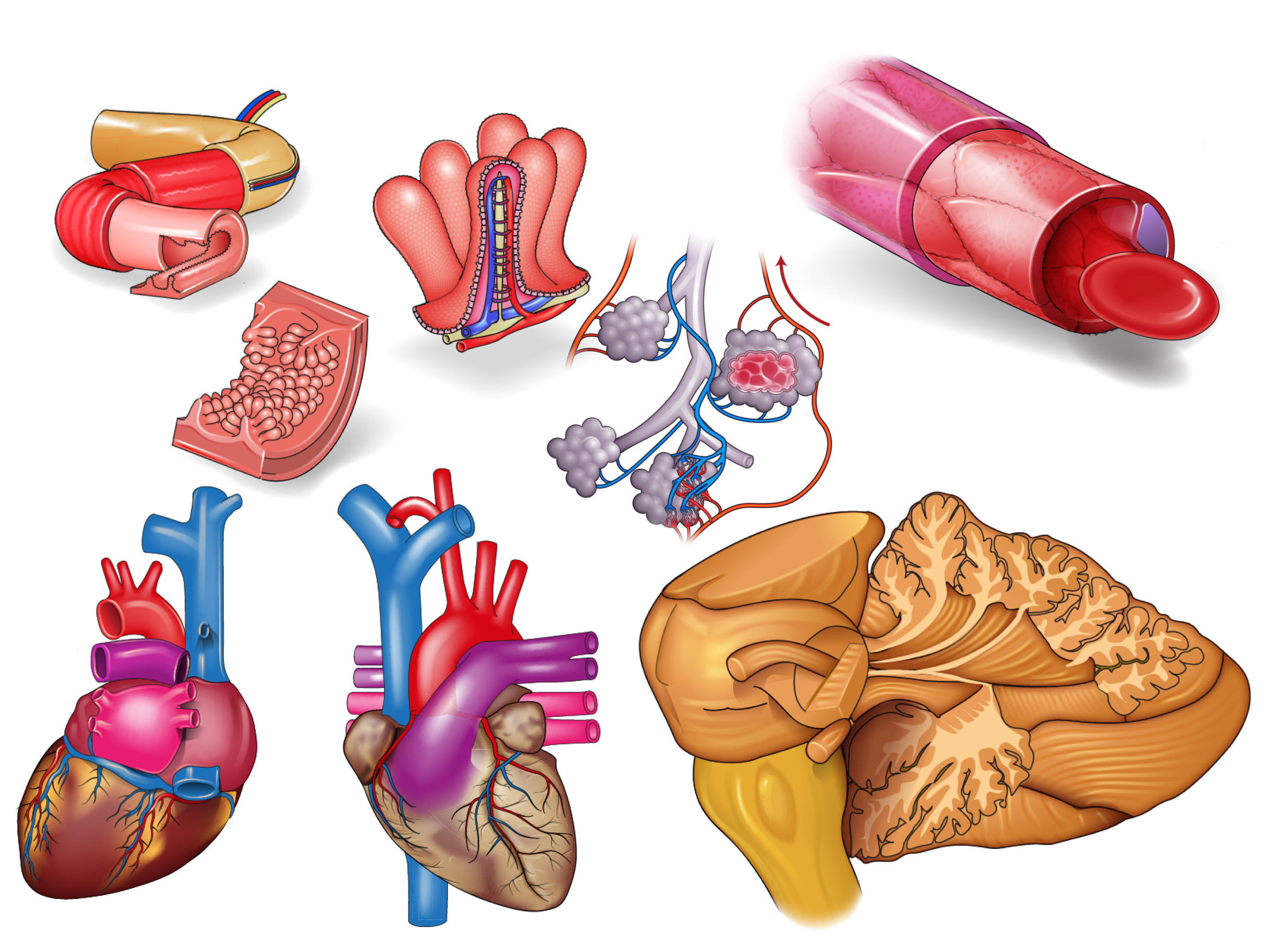

Learning about the human body led me into learning a lot about the human perceptual system, and about the quantitative methods that biology has available to measure aspects of it. This was a thrilling introduction to the opportunities for novel human-computer interaction.

From 2005 to 2014 I produced instructional illustrations for a medical teaching company. In that time I developed a deep interest in both human physiology and the visual communication techniques required to focus on the relevant details within the dizzying complexity of biological systems. My understanding of perception and reasoning was significantly strengthened by access to domain experts with whom I could discuss the subject material in depth.